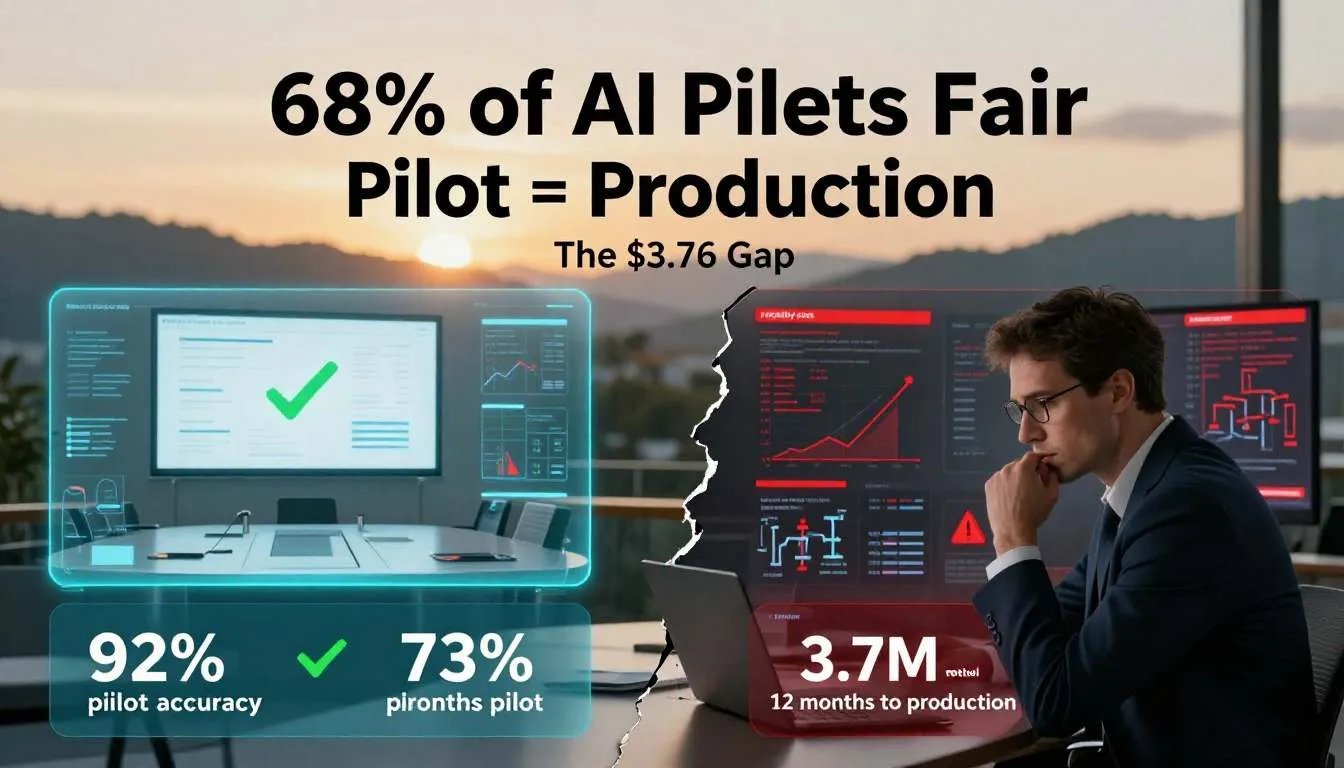

ROI Reality Check: Why 68% of AI Pilots Never Make It to Production (And How to Fix Yours)

Your VP of Innovation just walked into the boardroom with the demo everyone's been waiting for.

The AI pilot looks incredible. Customer service response time cut by 60%. Accuracy better than your best human agents. The live demo works flawlessly.

Everyone's impressed. You approve the budget to scale it company-wide.

Three months later, you're in a very different meeting.

The AI system crashes twice daily. It costs 4x what the pilot suggested. Your ops team can't maintain it. Customer satisfaction is down because the AI gives inconsistent responses. Your CFO is asking pointed questions about the $2.3M you've spent with nothing to show for it.

Welcome to the AI pilot-to-production gap: the graveyard where 68% of AI pilots go to die.

Here's what nobody tells you during those shiny demos: a working AI pilot and a production-ready AI system are two completely different things. The gap between them isn't technical—it's economic, operational, and organizational.

And unless you understand the real economics of Scalable AI ROI before you write the next check, you're about to learn an expensive lesson that hundreds of companies learned in 2024 and 2025.

The good news? The companies that figured this out are seeing genuine returns. Real-world AI business case studies from 2026 show 10-40x ROI when done right. The difference isn't the technology. It's the approach.

The Problem: The Pilot Trap That's Costing Companies Millions

Let me be blunt about what's happening across the enterprise AI landscape.

You've been sold a fantasy. The consulting firms, the AI vendors, the innovation teams—they all have an incentive to make pilots look successful. And pilots are successful. In controlled environments. With handpicked data. With dedicated engineering support. With unlimited compute budgets.

Then you try to scale it.

The Three Lies Your AI Pilot Is Telling You

Lie #1: "If It Works in the Pilot, It'll Work at Scale"

Your pilot processes 1,000 customer inquiries with 92% accuracy. Beautiful.

Production needs to handle 50,000 inquiries daily, with new edge cases every hour, from customers using language your pilot never saw, asking about products that didn't exist when you trained the model.

Pilot accuracy: 92%

Production accuracy after 30 days: 73%

Why: Model drift, data distribution shift, edge cases, adversarial inputs

The reality: Pilots run on best-case scenarios. Production runs on reality.

Lie #2: "Look at the Cost Savings!"

Your pilot analysis shows AI can handle customer service inquiries at $0.50 per interaction versus $8 for human agents.

ROI projection: 94% cost reduction!

But that calculation missed:

- MLOps infrastructure costs ($180K annually)

- Model retraining pipeline ($40K quarterly)

- Monitoring and maintenance (1.5 FTEs = $220K)

- Escalation to humans for failed cases (still 20% of volume)

- Integration costs with existing systems ($300K one-time)

Real cost per interaction: $2.80

Savings vs. human: 65% (not 94%)

Still valuable, but radically different economics
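To make the gap concrete, here is a small Python sketch of the arithmetic behind those percentages. The per-interaction costs and the 20% escalation rate come from the figures above. Pricing each escalated inquiry at the full human rate is an illustrative assumption, and fixed costs are left out because no volume is given to spread them over.

```python
HUMAN_COST = 8.00        # per interaction, from the pilot analysis
PILOT_AI_COST = 0.50     # per interaction, from the pilot analysis
REAL_AI_COST = 2.80      # per interaction, the fully loaded figure quoted above
ESCALATION_RATE = 0.20   # share of volume still routed to humans


def savings_pct(ai_cost: float, human_cost: float) -> float:
    """Percentage saved per interaction relative to the human baseline."""
    return (1 - ai_cost / human_cost) * 100


# Assumption: an escalated inquiry costs the full human rate and every other
# inquiry costs the pilot rate. Fixed costs are ignored, so this is a floor.
escalation_floor = (1 - ESCALATION_RATE) * PILOT_AI_COST + ESCALATION_RATE * HUMAN_COST

print(f"Pilot projection: {savings_pct(PILOT_AI_COST, HUMAN_COST):.1f}% saved")
print(f"Escalation-only floor: ${escalation_floor:.2f} per interaction")
print(f"Fully loaded: {savings_pct(REAL_AI_COST, HUMAN_COST):.1f}% saved")
```

Under that assumption, escalations alone lift the cost per interaction well above the pilot figure before any infrastructure or staffing is counted.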

Lie #3: "We Can Deploy This Next Quarter"

Your innovation team built the pilot in 3 months. Impressive.

Timeline to production-ready deployment:

- Infrastructure setup: 6-8 weeks

- Security and compliance review: 4-6 weeks

- Integration with existing systems: 8-12 weeks

- Training operational staff: 4-6 weeks

- Phased rollout with monitoring: 12-16 weeks

Total: 9-12 months minimum

And that's if everything goes smoothly. Which it won't.
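As a quick sanity check, the phase ranges can be summed directly. This sketch assumes the phases run strictly back to back with no overlap and no gaps, which is a simplification.

```python
# (phase, minimum weeks, maximum weeks), copied from the list above
PHASES = [
    ("Infrastructure setup", 6, 8),
    ("Security and compliance review", 4, 6),
    ("Integration with existing systems", 8, 12),
    ("Training operational staff", 4, 6),
    ("Phased rollout with monitoring", 12, 16),
]

WEEKS_PER_MONTH = 52 / 12  # average month length in weeks

min_weeks = sum(low for _, low, _ in PHASES)
max_weeks = sum(high for _, _, high in PHASES)

print(f"Sequential total: {min_weeks}-{max_weeks} weeks")
print(f"About {min_weeks / WEEKS_PER_MONTH:.1f} to {max_weeks / WEEKS_PER_MONTH:.1f} months")
```

The back-to-back sum lands slightly under the 9-12 month headline, which presumably leaves room for handoffs and slippage between phases.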

The CFO's Nightmare: The Hidden Cost Structure

Let's talk about the economics nobody discusses during pilot presentations.

Pilot costs (what you approved):

- Model development: $150K

- Data preparation: $50K

- Infrastructure: $20K

- 3-month timeline

Total: $220K

Production costs (what you actually pay):

Year 1:

- Infrastructure (cloud compute, storage, APIs): $280K

- MLOps platform and tools: $180K

- Model monitoring and maintenance: $160K

- Integration and deployment: $300K

- Training and change management: $120K

- Ongoing model improvement: $200K

- Buffer for unexpected issues: $150K

Total: $1.39M (not including the $220K pilot)

Years 2-3:

- Annual infrastructure: $350K (scales with usage)

- Maintenance and improvement: $280K annually

- Staff costs (2-3 dedicated FTEs): $450K annually

Three-year total cost of ownership: about $3.55M ($1.39M in Year 1 plus $1.08M in each of Years 2 and 3), or $3.77M including the pilot

Now, did anyone show you those numbers during the pilot demo?
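If you want to rerun this with your own figures, the roll-up is a few lines of Python. The amounts are the line items listed above; the dictionary keys are just illustrative labels.

```python
PILOT = 220_000

YEAR_1 = {
    "infrastructure": 280_000,
    "mlops_platform": 180_000,
    "monitoring_maintenance": 160_000,
    "integration_deployment": 300_000,
    "training_change_mgmt": 120_000,
    "model_improvement": 200_000,
    "contingency_buffer": 150_000,
}

STEADY_STATE_YEAR = {  # applies to each of Years 2 and 3
    "infrastructure": 350_000,
    "maintenance_improvement": 280_000,
    "staff": 450_000,
}

year_1 = sum(YEAR_1.values())
steady = sum(STEADY_STATE_YEAR.values())
production_tco = year_1 + 2 * steady
total_with_pilot = production_tco + PILOT

print(f"Year 1: ${year_1:,}")
print(f"Each of Years 2-3: ${steady:,}")
print(f"Three-year production TCO: ${production_tco:,}")
print(f"Including the pilot: ${total_with_pilot:,}")
```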

What Happens When You Ignore This Reality

I've seen the pattern repeatedly:

Month 1-3: Successful pilot, glowing reports, executive enthusiasm

Month 4-6: Budget approved, scaling begins, first issues emerge

Month 7-12: Costs balloon, performance degrades, finger-pointing starts

Month 13-18: Project quietly shelved, "AI initiative" becomes taboo

Month 19-24: New leadership proposes "doing AI right this time"

Result: Millions spent. Zero production value. Organizational skepticism about AI that lasts years.

The companies winning? They started with production economics in mind, not pilot metrics.

The Solution: The Production-First AI Framework

Let me show you how companies are actually achieving Scalable AI ROI—the frameworks that work when the demo ends and reality begins.

The Three-Phase Reality-Check Framework

Forget traditional pilot-to-production paths. They're designed to fail. Here's what actually works:

Phase 1: The Honest Economic Model (Week 1-2)

Before you build anything, model the real economics.

The Cost-Per-AI-Task Calculator:

True Cost per Task = (Infrastructure + MLOps + Maintenance + Labor + Overhead) ÷ Number of Tasks Processed

Target: True Cost per Task < Human Equivalent Cost × 0.6

If the target isn't met, either:

1. Don't automate yet

2. Choose a different task

3. Redesign the approach

Example: Customer Service Automation

Human baseline:

- Cost per inquiry: $7.50

- Volume: 40,000 monthly

- Monthly cost: $300K

AI system (realistic numbers):

- Infrastructure: $25K/month

- MLOps platform: $8K/month

- Monitoring/maintenance: $12K/month

- Human oversight (15% escalation): $35K/month

- Model improvement: $10K/month

Total: $90K/month

Cost per inquiry: $2.25

Savings: 70% ($210K/month)

Annual ROI: $2.52M in savings ($210K × 12 months) on a $780K investment = 323% first-year return

This is honest math. Start here.
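The calculator and the customer service example can be sketched in Python as follows. The class and function names are hypothetical; the monthly figures and the 0.6 threshold are the ones used in this section.

```python
from dataclasses import dataclass


@dataclass
class MonthlyCosts:
    infrastructure: float
    mlops: float
    maintenance: float
    human_oversight: float
    model_improvement: float

    def total(self) -> float:
        return (self.infrastructure + self.mlops + self.maintenance
                + self.human_oversight + self.model_improvement)


def cost_per_task(costs: MonthlyCosts, tasks_per_month: int) -> float:
    if tasks_per_month <= 0:
        raise ValueError("tasks_per_month must be positive")
    return costs.total() / tasks_per_month


def meets_target(ai_cost: float, human_cost: float, threshold: float = 0.6) -> bool:
    """True when the AI cost per task is below threshold x the human cost."""
    return ai_cost < human_cost * threshold


# Figures from the customer service example
costs = MonthlyCosts(25_000, 8_000, 12_000, 35_000, 10_000)
ai_cost = cost_per_task(costs, 40_000)
human_cost = 7.50

print(f"AI cost per inquiry: ${ai_cost:.2f}")
print(f"Savings vs. human: {(1 - ai_cost / human_cost):.0%}")
print(f"Meets the 0.6 target: {meets_target(ai_cost, human_cost)}")
```

Swapping in a lower monthly volume is a quick way to see how fast fixed costs erode the per-task figure.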

Key questions to answer before proceeding:

- What's the Cost per AI Task at scale? (Not in pilot—at full production volume)

- What's the comparable human cost? (Fully loaded, not just salary)

- What's the realistic accuracy target? (Not pilot accuracy—production accuracy with drift)

- What's the maintenance cost? (Retraining, monitoring, improvement)

- What's the integration cost? (Getting this into existing workflows)

If you can't answer these five questions with data-backed numbers, you're not ready to scale.

Phase 2: The Operational Readiness Assessment (Week 3-4)

Most AI projects fail not because the technology doesn't work, but because the organization can't operate it.

The MLOps for Business Readiness Checklist:

Infrastructure:

- Cloud or on-prem infrastructure scaled for production load (not pilot load)

- API rate limits and SLAs that match business requirements

- Backup and disaster recovery plans

- Security and compliance frameworks approved

- Cost monitoring and automatic scaling rules

Operational Team:

- Designated owner who understands both AI and business operations

- On-call rotation for AI system issues (yes, like any production system)

- Escalation paths when AI fails (will happen—plan for it)

- Training for operational staff who'll work alongside AI

- Change management plan for affected teams

Monitoring and Maintenance:

- Real-time performance dashboards tracking key metrics

- Automated alerts for accuracy degradation

- Retraining pipeline (how often, with what data, who approves)

- A/B testing framework for model improvements

- Feedback loops from end users

Integration:

- APIs documented and tested at production scale

- Fallback mechanisms when AI is unavailable

- Data pipelines for continuous model feeding

- Version control and rollback procedures

- Integration testing with all dependent systems

If more than 30% of items are unchecked, delay production rollout.
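The 30% rule is easy to automate once the checklist lives somewhere structured. Below is a minimal sketch, assuming each checklist item is tracked as a simple true/false value; the function names and the example answers are hypothetical.

```python
def unchecked_share(checklist: dict[str, list[bool]]) -> float:
    """Fraction of checklist items that are still unchecked."""
    items = [done for section in checklist.values() for done in section]
    if not items:
        raise ValueError("checklist is empty")
    return items.count(False) / len(items)


def ready_for_rollout(checklist: dict[str, list[bool]], max_unchecked: float = 0.30) -> bool:
    """Apply the rule above: delay if more than 30% of items are unchecked."""
    return unchecked_share(checklist) <= max_unchecked


# Hypothetical answers for the 20 items above (5 per section)
example = {
    "infrastructure": [True, True, True, False, True],
    "operational_team": [True, False, False, True, True],
    "monitoring_maintenance": [True, True, False, False, True],
    "integration": [True, True, True, False, False],
}

print(f"Unchecked: {unchecked_share(example):.0%}")
print(f"Ready for rollout: {ready_for_rollout(example)}")
```

In this example 7 of 20 items are unchecked, so the rule says to delay.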

Real example from a Series C SaaS company:

What they did right:

- Built operational dashboards before deploying AI

- Hired ML engineer to own system full-time

- Created detailed runbooks for common issues

- Established weekly review of model performance

What they initially missed:

- Didn't plan for retraining frequency

- Underestimated customer support training needs

- No fallback when API hit rate limits

Cost of mistakes: 3-week unplanned outage, $180K in customer credits

Lesson: Operational readiness isn't optional.

Phase 3: The Phased Rollout Strategy (Month 1-6)

Never flip the switch on day one. Ever.

The 10-25-50-100 Rollout:

10% Rollout (Month 1):

- Deploy to least risky segment (internal users, small customer subset, or controlled geography)

- Monitor intensively—check metrics daily

- Gather operational learnings

- Decision gate: Are metrics meeting projections? Is operations sustainable? Proceed or pause?

25% Rollout (Month 2-3):

- Expand to broader but still controlled segment

- Implement operational improvements from 10% phase

- Validate economics at increased scale

- Decision gate: Cost per task still within targets? Quality maintained? Proceed or pause?

50% Rollout (Month 4-5):

- Now handling significant production load

- True stress testing of infrastructure and operations

- Edge cases and data drift become apparent

- Decision gate: System stable under real-world diversity? Economics maintained or improved? Proceed or pause?

100% Rollout (Month 6+):

- Full production deployment

- Continuous monitoring and improvement

- Quarterly business review of ROI vs. projections

Critical: Each gate requires approval from the CFO and operational leadership, not just the tech team.
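One way to keep the gates honest is to encode them so that a stage cannot advance unless every condition holds. This is a sketch under stated assumptions: the metric names and the pass criteria are hypothetical simplifications of the decision gates described above.

```python
from dataclasses import dataclass

STAGES = [10, 25, 50, 100]  # percent of traffic, from the rollout plan above


@dataclass
class GateMetrics:
    cost_per_task: float
    target_cost_per_task: float
    accuracy: float
    target_accuracy: float
    ops_sustainable: bool       # judgment call from the operations lead
    leadership_approved: bool   # CFO and operational leadership sign-off


def gate_passed(m: GateMetrics) -> bool:
    return (
        m.cost_per_task <= m.target_cost_per_task
        and m.accuracy >= m.target_accuracy
        and m.ops_sustainable
        and m.leadership_approved
    )


def next_stage(current: int, m: GateMetrics) -> int:
    """Return the next rollout percentage, or the current one if paused."""
    position = STAGES.index(current)  # raises ValueError for an unknown stage
    if not gate_passed(m) or position == len(STAGES) - 1:
        return current
    return STAGES[position + 1]


metrics = GateMetrics(2.25, 4.50, 0.90, 0.88, True, True)
print(next_stage(10, metrics))
```

A failed gate simply returns the current stage, which maps to "pause" in the plan above.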

Measuring What Actually Matters: The Scalable AI ROI Scorecard

Forget vanity metrics. Here's what CEOs and CFOs should demand:

Financial Metrics:

- Cost per AI Task (fully loaded, measured monthly)

- Human-equivalent cost baseline (for comparison)

- Total Cost of Ownership (cumulative spend vs. plan)

- Payback Period (when does cumulative savings exceed investment?)

- Net Present Value (3-year view)

Operational Metrics:

- Production accuracy (not pilot accuracy—real-world performance)

- Task completion rate (% handled without human intervention)

- Mean time to resolve issues (when things break, how fast do you recover?)

- Model drift rate (how fast does performance degrade?)

- Retraining frequency required (operational burden)

Business Impact Metrics:

- Customer satisfaction (AI should improve this, not degrade it)

- Employee productivity (for humans working alongside AI)

- Time to value (how quickly does AI start adding value?)

- Scalability ceiling (at what volume do economics break?)

Dashboard these monthly. No exceptions.
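Two of the financial metrics, payback period and net present value, are simple enough to compute yourself. The sketch below uses the $780K investment and $210K monthly savings from the customer service example earlier. The 10% discount rate and the flat three-year savings are assumptions for illustration only.

```python
from typing import Optional, Sequence


def payback_month(investment: float, monthly_savings: float,
                  horizon_months: int = 36) -> Optional[int]:
    """First month in which cumulative savings reach the investment, if any."""
    cumulative = 0.0
    for month in range(1, horizon_months + 1):
        cumulative += monthly_savings
        if cumulative >= investment:
            return month
    return None


def npv(rate: float, cashflows: Sequence[float]) -> float:
    """Net present value; cashflows[0] is today, cashflows[1] is year 1, etc."""
    return sum(cf / (1 + rate) ** year for year, cf in enumerate(cashflows))


investment = 780_000
monthly_savings = 210_000
annual_savings = monthly_savings * 12

print(f"Payback month: {payback_month(investment, monthly_savings)}")
# Assumed: 10% discount rate, savings held flat for three years
print(f"3-year NPV: ${npv(0.10, [-investment] + [annual_savings] * 3):,.0f}")
```

Rerunning with conservative savings (for example, discounting pilot benefits by 30-50%) shows how sensitive the payback date is to optimistic inputs.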

Real AI Business Case Studies 2026: What Actually Worked

Let me show you companies that got this right and the specific decisions that made the difference.

Case Study 1: Mid-Market Manufacturing—Quality Control AI

Company: 800-employee precision manufacturer

Use case: Visual inspection for product defects

Investment: $1.2M over 18 months

What they did right:

- Calculated Cost per AI Task upfront: $0.18 vs. $2.40 human inspection

- Deployed to single production line first (10% rollout lasted 4 months)

- Built retraining pipeline before scaling—model refreshed weekly with new defect types

- Kept human inspectors for edge cases and model training

Results:

- Year 1 ROI: -15% (investment phase)

- Year 2 ROI: +240% (scaled to 50% of production)

- Year 3 projection: +380% (full deployment)

- Key metric: Defect detection improved from 94% (human) to 98.7% (AI+human hybrid)

Critical success factor: "We treated AI as augmenting inspectors, not replacing them. Inspectors became AI trainers and handled edge cases. This made organizational adoption smooth and kept improving the model."

Case Study 2: Financial Services—Document Processing

Company: Regional bank, 3,000 employees

Use case: Loan document verification and extraction

Investment: $2.8M over 24 months

What they did right:

- Honest economic model: $4.20 per document (AI) vs. $18 (human processing)

- Built MLOps infrastructure before scaling: automated retraining, monitoring, compliance logging

- Phased rollout over 14 months: started with refinance applications (simpler documents), expanded to complex commercial loans

- Invested in change management: trained 40 loan officers on working with AI

Results:

- 18-month payback period (faster than projected 24 months)

- Processing time: 4 days → 6 hours (about a 94% reduction if the 4 days are counted as 96 elapsed hours)

- Accuracy: 96.3% (human baseline: 94.1%)

- Volume capacity: 3x increase without headcount growth

Critical success factor: "We over-invested in MLOps infrastructure early. Cost $400K upfront, but saved us from the operational chaos competitors faced. Our system just runs."

Case Study 3: E-Commerce—Dynamic Pricing

Company: $500M revenue online retailer

Use case: Real-time pricing optimization

Investment: $3.5M over 18 months

What they did wrong initially:

- Pilot showed 8% margin improvement on 100 SKUs

- Scaled to 10,000 SKUs without operational plan

- Model started making erratic pricing decisions

- Customer complaints spiked

- Had to roll back after 6 weeks

What they fixed:

- Rebuilt with operational constraints: max 5% price change per day, human approval for changes >15%

- Implemented monitoring dashboards tracking margin, volume, competitor pricing, and customer feedback

- Created a dedicated MLOps team (3 FTEs)

- Rolled out gradually: 1K SKUs → 5K SKUs → 10K SKUs over 9 months
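The pricing constraints can be sketched as a guardrail that sits between the model and the storefront. The case study does not say how the two limits interact, so this example assumes the 5% cap applies to each day's change and the 15% threshold applies to cumulative drift from a reference price. Both the function name and that interpretation are hypothetical.

```python
def apply_price_guardrails(
    current_price: float,
    proposed_price: float,
    reference_price: float,
    max_daily_change: float = 0.05,
    approval_threshold: float = 0.15,
) -> tuple[float, bool]:
    """Clamp the model's proposal and flag it for review if needed.

    Returns (new_price, needs_human_approval).
    """
    if current_price <= 0 or reference_price <= 0:
        raise ValueError("prices must be positive")

    # Clamp the proposal to within +/- max_daily_change of today's price.
    lower = current_price * (1 - max_daily_change)
    upper = current_price * (1 + max_daily_change)
    new_price = min(max(proposed_price, lower), upper)

    # Assumed rule: drift beyond the threshold from the reference price
    # (for example, the price at the start of the month) needs sign-off.
    drift = abs(new_price - reference_price) / reference_price
    return new_price, drift > approval_threshold


price, needs_approval = apply_price_guardrails(100.0, 120.0, 100.0)
print(f"New price: {price:.2f}, needs approval: {needs_approval}")
```

The model can still propose anything it likes; the guardrail decides what actually reaches customers.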

Results after relaunch:

- Margin improvement: 4.2% (not 8%, but sustainable)

- Revenue impact: +$21M annually

- ROI: 600% over 3 years

- Key learning: "Sustainable 4% beats unsustainable 8%. We optimized for operational stability."

Critical success factor: "We learned the hard way that pilot performance doesn't translate directly. Now we assume pilots overestimate benefits by 30-50% and plan accordingly."

The MLOps for Business Essentials (Non-Technical Leader's Guide)

You don't need to become an AI engineer. But you do need to understand these operational realities:

What MLOps Actually Means:

Think of MLOps as DevOps for AI. Just like software needs deployment pipelines, version control, and monitoring, AI models need:

- Automated retraining pipelines (models degrade over time—this fixes them automatically)

- Performance monitoring (real-time dashboards showing if AI is working correctly)

- A/B testing frameworks (test model improvements before rolling them out)

- Version control (roll back to previous model if new one performs worse)

- Data quality checks (garbage in = garbage out—automate data validation)
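For a sense of what performance monitoring means in practice, here is a minimal rolling-accuracy alert in Python. It assumes someone (or some process) labels each AI response as correct or not. The class name, window size, and 5-point tolerance are illustrative assumptions, not a specific product's API.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Tracks accuracy over the most recent `window` labeled outcomes."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 500):
        self.baseline = baseline      # accuracy expected in production
        self.tolerance = tolerance    # allowed drop before alerting
        self.outcomes = deque(maxlen=window)

    def record(self, correct: bool) -> None:
        self.outcomes.append(correct)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self) -> bool:
        # Wait for a full window so a few early misses do not page anyone.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline=0.92, window=100)
for i in range(100):
    monitor.record(i < 73)  # 73 correct out of 100, like the drifted model
print(monitor.rolling_accuracy(), monitor.should_alert())
```

An alert like this is what triggers the retraining pipeline or a rollback, rather than waiting for customer complaints.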

Why this matters to CFOs:

Without MLOps, every model update requires manual work = expensive and slow.

With MLOps, model improvements are automatic and continuous = scalable.

Budget allocation rule of thumb:

- Model development: 30% of AI budget

- MLOps infrastructure: 25% of AI budget

- Operations and maintenance: 45% of AI budget

Most companies do this backwards: 70% on development, 10% on operations. Then wonder why production fails.

The Decision Framework: Build, Buy, or Wait?

Not every AI opportunity should be pursued. Here's how to evaluate:

Build in-house if:

- The use case is core to competitive advantage

- You have repeatable high-volume tasks (economics scale)

- You can hire/train 2-3 dedicated ML engineers

- You're willing to invest in MLOps infrastructure ($200K-500K)

- Payback period is under 24 months at realistic projections

Buy (vendor solution) if:

- Use case is common across industries (CRM, customer service, document processing)

- You lack internal ML expertise

- You need deployment in under 6 months

- The vendor has proven case studies in your industry

- Total cost is still <60% of human equivalent

Wait if:

- Cost per AI Task exceeds 70% of human cost (insufficient savings)

- Operational team isn't ready (infrastructure, monitoring, processes)

- Data quality is poor (will need 6+ months of data cleanup)

- Organizational resistance is high (will undermine adoption)

- Use case is low-volume (<1,000 tasks monthly—fixed costs kill ROI)

Be honest. Waiting is often the right answer.
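As a rough aid, the criteria above can be turned into a first-pass screen. This is a deliberately simplified sketch: it covers only the criteria that reduce to a number or a yes/no, the parameter names are hypothetical, and checking the "wait" conditions first is a design assumption rather than something the framework prescribes.

```python
def recommend(
    cost_ratio: float,          # AI cost per task / human cost per task
    monthly_tasks: int,
    ops_ready: bool,
    data_quality_ok: bool,
    core_to_advantage: bool,
    has_ml_engineers: bool,
    payback_months: float,
) -> str:
    """Return 'build', 'buy', or 'wait' from a simplified reading of the lists."""
    # Any single "wait" condition blocks the initiative.
    if cost_ratio > 0.70 or monthly_tasks < 1_000:
        return "wait"
    if not ops_ready or not data_quality_ok:
        return "wait"
    # Build only when the use case is strategic and the team can own it.
    if core_to_advantage and has_ml_engineers and payback_months < 24:
        return "build"
    # A vendor solution still has to clear the 60% cost bar.
    if cost_ratio < 0.60:
        return "buy"
    return "wait"


print(recommend(0.30, 40_000, True, True, True, True, 18))
```

Treat the output as a conversation starter for the review meeting, not a verdict; organizational resistance and vendor track record still need human judgment.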

The Hard Truth About AI ROI

Here's what every CEO and CFO needs to hear:

Most AI initiatives shouldn't proceed to production. Not because AI doesn't work, but because the economics don't work for that specific use case at that specific company at this specific time.

The successful companies? They killed 60-70% of their AI pilots after rigorous economic analysis. The remaining 30-40% delivered 10-40x returns because resources were concentrated on viable opportunities with operational readiness.

The struggling companies? They tried to scale everything. Spread resources thin. Ended up with a portfolio of half-working AI systems that cost more to maintain than the value they deliver.

Scalable AI ROI isn't about having the best AI. It's about choosing the right problems and executing disciplined, operationally sound deployments.

The Questions Your Board Should Be Asking

If you're evaluating an AI initiative, these questions cut through the hype:

- "What's the fully-loaded Cost per AI Task at production scale?" (If they can't answer with data, it's not ready.)

- "What happens when the AI fails? How do we recover?" (Operational resilience matters more than accuracy.)

- "Who owns this in production? What's their on-call plan?" (If no one's responsible, it will fail.)

- "What's the retraining plan and frequency?" (Models drift; this isn't optional.)

- "What's our rollback plan if this degrades business metrics?" (You need an exit strategy.)

- "How does this compare to human cost in Year 1, 2, and 3?" (Long-term economics matter more than first-year savings.)

- "What's the payback period using conservative assumptions?" (If it's over 24 months, scrutinize harder.)

If your team can't answer these confidently with data, pause the initiative until they can.

Your 90-Day Action Plan: From Pilot Purgatory to Production Reality

Stop celebrating pilot success. Start validating production viability.

Month 1: Economic Reality Check

- Week 1: Calculate true Cost per AI Task for all active pilots

- Week 2: Model 3-year TCO including MLOps, maintenance, operations

- Week 3: Rank pilots by realistic ROI (conservative assumptions)

- Week 4: Kill bottom 50%, double down on top 20%
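The Week 3 ranking and Week 4 cut can be sketched in a few lines of Python. The pilot names and ROI figures below are made up, and the rounding choices (round the top 20% up, round the bottom 50% down) are assumptions you may want to change.

```python
import math


def triage(pilot_roi: dict[str, float]) -> dict[str, list[str]]:
    """Split pilots into double-down, hold, and kill groups by realistic ROI."""
    ranked = sorted(pilot_roi, key=pilot_roi.get, reverse=True)
    n = len(ranked)
    n_top = math.ceil(n / 5)   # top 20%, rounded up
    n_kill = n // 2            # bottom 50%, rounded down
    return {
        "double_down": ranked[:n_top],
        "hold": ranked[n_top:n - n_kill],
        "kill": ranked[n - n_kill:],
    }


# Hypothetical portfolio: pilot name -> conservative 3-year ROI multiple
portfolio = {
    "support_bot": 3.2, "doc_extraction": 2.8, "pricing": 1.9, "forecasting": 1.4,
    "lead_scoring": 1.1, "churn_model": 0.9, "hr_screening": 0.6, "chat_search": 0.4,
    "image_tagging": 0.2, "voice_ivr": -0.1,
}

for group, names in triage(portfolio).items():
    print(group, names)
```

The middle group is whatever remains after the cut: pilots that are neither funded further nor killed until the next review.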

Month 2: Operational Readiness

- Week 1: Assess MLOps infrastructure gaps (use checklist from this article)

- Week 2: Identify owners and build operational teams

- Week 3: Create monitoring dashboards and alert systems

- Week 4: Document runbooks and escalation procedures

Month 3: Measured Rollout

- Week 1-2: Deploy top-ranked initiative at 10% scale

- Week 3: Monitor intensively, gather operational learnings

- Week 4: Decision gate—proceed to 25% or pause and fix issues

By day 90, you should have:

- Honest economics for all AI initiatives

- Operational infrastructure for production deployment

- One AI system in phased production rollout

- Data-driven decision framework for future investments

That's it. No moonshots. No innovation theater. Just disciplined execution toward Scalable AI ROI.

The Bottom Line: Production Is Where ROI Lives

Pilots are science experiments. Production is business.

The gap between them isn't technical—it's economic, operational, and organizational.

The companies behind the genuine AI success stories of 2026 didn't have better technology. They had better economic models, operational discipline, and organizational readiness.

They understood that Scalable AI ROI comes from boring fundamentals:

- Honest cost modeling

- Operational infrastructure

- Phased rollouts with decision gates

- Continuous monitoring and improvement

- Disciplined resource allocation

Not sexy. But it works.

While your competitors are celebrating pilot demos and writing press releases about "AI transformation," you can be quietly deploying production AI systems that actually deliver value.

The choice is yours: impressive pilots that go nowhere, or operational AI systems that compound value over years.

One generates LinkedIn posts. The other generates profit.

Choose wisely.